If big business is doing it, why not do it too? Learning how to scrape a website can help you find the best deals, gather leads for your business, and even land a new job.

Use a Web Scraping Service

The quickest and simplest way to gather data from the internet is to use a professional web scraping service. If you need to collect large amounts of data, a service like Scrapinghub might be a good fit, since it offers large-scale, easy-to-use online data collection. For smaller jobs, such as scraping a handful of websites, ParseHub is worth a look. All users start on a free 200-page plan that requires no credit card, and tiered paid plans are available if you need more.

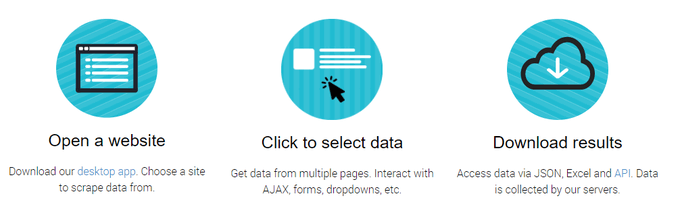

Web Scraping App

For a quick, free, and convenient way of scraping websites, the Web Scraper Chrome Extension is a great choice. There is a bit of a learning curve, but the developer provides thorough documentation and tutorial videos. Web Scraper is among the simplest and best tools for small-scale data collection, and its free tier offers more than most.

Use Microsoft Excel To Scrape a Website

For something a little more familiar, Microsoft Excel offers a basic web scraping feature. To try it out, open a new Excel workbook and select the Data tab. Click From Web in the toolbar, then follow the wizard’s instructions to start the collection. From there, you have several options for saving the data into your spreadsheet. Check out our guide to web scraping with Excel for a full tutorial.
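If you’d rather script it, you can sketch a rough Python equivalent of the From Web import using only the standard library. The sketch pulls the cells out of an HTML table and saves them as CSV. It is not how Excel works internally. The sample HTML and the `TableParser` class are hypothetical, and a real page would need to be downloaded first, for example with `urllib.request.urlopen`.

```python
import csv
import io
from html.parser import HTMLParser


class TableParser(HTMLParser):
    """Collects the text of every <th>/<td> cell, one list per <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None   # cells of the row currently being read
        self._cell = None  # text fragments of the cell currently being read

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None


def table_to_csv(html: str) -> str:
    """Return the rows of every (non-nested) table in `html` as CSV text."""
    parser = TableParser()
    parser.feed(html)
    out = io.StringIO()
    csv.writer(out).writerows(parser.rows)
    return out.getvalue()


# Hypothetical sample page; in practice you would download the HTML first.
SAMPLE = """
<table>
  <tr><th>Product</th><th>Price</th></tr>
  <tr><td>Widget</td><td>$4.99</td></tr>
  <tr><td>Gadget</td><td>$12.50</td></tr>
</table>
"""

if __name__ == "__main__":
    print(table_to_csv(SAMPLE))
```

Excel’s wizard also handles page discovery, data types, and refreshes, which this sketch deliberately leaves out.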

Use the Scrapy Python Library

If you are familiar with the Python programming language, Scrapy is an excellent library to learn. It lets you set up custom “spiders” that crawl websites to extract information. You can then use the information gathered in your programs or export it to a file. The Scrapy tutorial covers everything from basic web scraping through to professional-level, multi-spider scheduled information gathering. Learning Scrapy isn’t just useful for your own needs: developers who know how to use it are in high demand, which could even lead to a whole new career.
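Scrapy handles downloading, scheduling, and exporting for you, but the core loop of a spider is simple. It starts at a URL, extracts data, and queues up the links it finds. The sketch below shows that loop in plain Python so it runs without Scrapy installed. It is not Scrapy’s API. The `PAGES` dictionary stands in for the web, and `crawl` and `LinkAndTitleParser` are hypothetical names.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin

# Hypothetical in-memory "website" so the sketch runs offline.
PAGES = {
    "https://example.com/": '<title>Home</title><a href="/a">A</a> <a href="/b">B</a>',
    "https://example.com/a": '<title>Page A</title><a href="/b">B</a>',
    "https://example.com/b": '<title>Page B</title><a href="/">Home</a>',
}


class LinkAndTitleParser(HTMLParser):
    """Records the page <title> and every <a href> on a single page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.links = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def crawl(start_url, fetch, max_pages=50):
    """Breadth-first crawl: visit each URL once and yield (url, title) items."""
    queue = deque([start_url])
    seen = {start_url}
    visited = 0
    while queue and visited < max_pages:
        url = queue.popleft()
        html = fetch(url)  # returns None for pages that can't be fetched
        visited += 1
        if html is None:
            continue
        parser = LinkAndTitleParser()
        parser.feed(html)
        yield url, parser.title.strip()
        # Resolve relative links and queue any we haven't seen yet.
        for href in parser.links:
            absolute = urljoin(url, href)
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)


if __name__ == "__main__":
    for url, title in crawl("https://example.com/", PAGES.get):
        print(url, title)
```

In real Scrapy, you would subclass `scrapy.Spider` and yield items and follow-up requests from its `parse` method. Scrapy then adds concurrency, retries, politeness settings, and exporters on top of this basic loop.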

Use The Beautiful Soup Python Library

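Beautiful Soup is a Python library for parsing HTML and XML, which makes it a popular tool for web scraping. It has been around longer than Scrapy, but the two work differently. Scrapy is a full crawling framework, whereas Beautiful Soup only parses pages, so it is usually paired with a library such as Requests to download them. Many users find Beautiful Soup easier to use than Scrapy. It isn’t as fully featured, but for many use cases it strikes a good balance between functionality and ease of use for Python programmers.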
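Much of Beautiful Soup’s day-to-day use is searching parsed HTML, for example finding every element with a given class. Beautiful Soup is a third-party package, so the sketch below uses the standard library’s `html.parser` module instead. Beautiful Soup can also use `html.parser` as its underlying parser. The sketch shows the same kind of class-based extraction without installing anything. The product markup and the `extract_by_class` helper are hypothetical.

```python
from html.parser import HTMLParser

# Elements that never have a closing tag, so they must not affect nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class ClassTextParser(HTMLParser):
    """Collects the text inside every element that has a given CSS class."""

    def __init__(self, css_class):
        super().__init__()
        self.css_class = css_class
        self.results = []
        self._depth = 0    # > 0 while inside a matching element
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self._depth:
            self._depth += 1  # nested tag inside a match
            return
        classes = (dict(attrs).get("class") or "").split()
        if self.css_class in classes:
            self._depth = 1
            self._buffer = []

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self._depth:
            return
        self._depth -= 1
        if self._depth == 0:
            # Collapse runs of whitespace, much like get_text(" ", strip=True).
            self.results.append(" ".join("".join(self._buffer).split()))

    def handle_data(self, data):
        if self._depth:
            self._buffer.append(data)


def extract_by_class(html, css_class):
    """Return the text of each element whose class list contains `css_class`."""
    parser = ClassTextParser(css_class)
    parser.feed(html)
    parser.close()
    return parser.results


SAMPLE = """
<ul>
  <li class="product"><span class="name">Widget</span> <span class="price">$4.99</span></li>
  <li class="product"><span class="name">Gadget <b>Pro</b></span> <span class="price">$12.50</span></li>
</ul>
"""

if __name__ == "__main__":
    names = extract_by_class(SAMPLE, "name")
    prices = extract_by_class(SAMPLE, "price")
    print(list(zip(names, prices)))
```

With Beautiful Soup installed, the rough equivalent is `soup.select(".name")` followed by `get_text(" ", strip=True)` on each result.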

Use a Web Scraping API

Even if you write your own web scraping code, you still need somewhere to run it, and that is usually your own machine. This is fine for small operations, but as your data collection scales up, it will use up precious bandwidth and can slow down your network. A web scraping API offloads some of that work to a remote server, which you access from your code. There are several options, from fully featured, professionally priced services like Dexi to stripped-back services like ScraperAPI. Both cost money to use, but ScraperAPI offers 1,000 free API calls so you can try the service before committing to it.
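Many scraping APIs follow a similar pattern. Instead of requesting the target page yourself, you send its URL to the provider along with your API key, and the provider returns the HTML. The sketch below mainly shows how such a request URL is built with the standard library. The endpoint and the `api_key`/`url` parameter names are modelled on ScraperAPI’s simple GET interface, but treat them as assumptions and check them against the provider’s current documentation. `YOUR_API_KEY` is a placeholder.

```python
import urllib.request
from urllib.parse import urlencode

# ASSUMPTION: endpoint and parameter names modelled on ScraperAPI's GET interface;
# verify against the provider's current documentation before relying on them.
API_ENDPOINT = "https://api.scraperapi.com/"


def build_api_url(api_key: str, target_url: str) -> str:
    """Return a URL that asks the scraping API to fetch `target_url` on our behalf."""
    # urlencode percent-encodes the target URL so its own ?, = and / survive intact.
    query = urlencode({"api_key": api_key, "url": target_url})
    return f"{API_ENDPOINT}?{query}"


def fetch_via_api(api_key: str, target_url: str, timeout: float = 60.0) -> str:
    """Download `target_url` through the remote API (this makes a real network call)."""
    with urllib.request.urlopen(build_api_url(api_key, target_url), timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")


if __name__ == "__main__":
    # Placeholder key; nothing is sent unless you call fetch_via_api().
    print(build_api_url("YOUR_API_KEY", "https://example.com/products?page=2"))
```

The heavy lifting, such as proxies, retries, and the bandwidth for the target page, happens on the provider’s side. Your code only makes one outbound request per page.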

Use IFTTT To Scrape a Website

IFTTT is a powerful automation tool. You can use it to automate almost anything, including data collection and web scraping. One of the huge benefits of IFTTT is its integration with many web services. A basic example using Twitter could look something like this:

1. Sign in to IFTTT and select Create.
2. Select Twitter on the service menu.
3. Select New Tweet From Search.
4. Enter a search term or hashtag, and click Create Trigger.
5. Choose Google Sheets as your action service.
6. Select Add Row to Spreadsheet and follow the steps.
7. Click Create Action.

In just a few short steps, you have created an automatic service that logs every tweet matching a search term or hashtag, along with the username and the time it was posted. With so many options for connecting online services, IFTTT, or one of its alternatives, is an ideal tool for simple data collection by scraping websites.
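Under the hood, an applet like this pairs a trigger (a new tweet matching your search) with an action (append a row). The sketch below mimics that logic in Python against a hypothetical list of tweets. It writes the username, timestamp, and text to CSV in place of Google Sheets. It does not use IFTTT or the Twitter API, and the tweet dictionaries and their field names are made up for illustration.

```python
import csv
import io


def matches(tweet, term):
    """Trigger condition: the tweet text contains the search term (case-insensitive)."""
    return term.lower() in tweet["text"].lower()


def log_matching_tweets(tweets, term, sheet):
    """Action: append (username, time, text) for each matching tweet; return rows added."""
    writer = csv.writer(sheet)
    count = 0
    for tweet in tweets:
        if matches(tweet, term):
            writer.writerow([tweet["user"], tweet["created_at"], tweet["text"]])
            count += 1
    return count


# Hypothetical tweets; the field names are illustrative, not Twitter's API schema.
TWEETS = [
    {"user": "alice", "created_at": "2020-05-01T09:15:00Z", "text": "Loving #webscraping with Python"},
    {"user": "bob", "created_at": "2020-05-01T09:20:00Z", "text": "Coffee time"},
    {"user": "carol", "created_at": "2020-05-01T10:02:00Z", "text": "New #WebScraping tutorial is up"},
]

if __name__ == "__main__":
    sheet = io.StringIO()  # swap for open("tweets.csv", "a", newline="") to keep a file
    added = log_matching_tweets(TWEETS, "#webscraping", sheet)
    print(added, "rows added")
    print(sheet.getvalue())
```

IFTTT runs the trigger check for you on a schedule. Here, you would have to call `log_matching_tweets` yourself whenever new tweets arrive.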

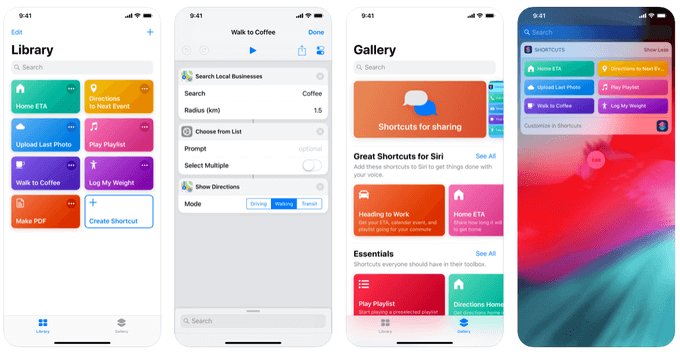

Web Scraping With The Siri Shortcuts App

For iOS users, the Shortcuts app is a great tool for linking and automating your digital life. While you might be familiar with its integration with your calendar, contacts, and maps, it is capable of much more. In a detailed post, Reddit user u/keveridge outlines how to use regular expressions with the Shortcuts app to pull detailed information from websites. Regular expressions allow much more fine-grained searching and can work across multiple files to return only the information you need.
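The Shortcuts post itself isn’t reproduced here, but the underlying technique looks much the same in any language. You match a pattern against a page’s raw text and keep only the captured parts. Below is a minimal Python sketch that assumes a hypothetical snippet of weather-page HTML. The `forecast` helper and the markup are illustrative only.

```python
import re

# Hypothetical page source; a real shortcut would first fetch the page contents.
PAGE = """
<div class="forecast"><span class="day">Mon</span><span class="temp">18°C</span></div>
<div class="forecast"><span class="day">Tue</span><span class="temp">21°C</span></div>
<div class="forecast"><span class="day">Wed</span><span class="temp">-2°C</span></div>
"""

# Named groups capture the day and the (possibly negative) whole-number temperature.
PATTERN = re.compile(
    r'<span class="day">(?P<day>\w+)</span>\s*'
    r'<span class="temp">(?P<temp>-?\d+)°C</span>'
)


def forecast(page):
    """Return (day, temperature) pairs found in the page text."""
    return [(m["day"], int(m["temp"])) for m in PATTERN.finditer(page)]


if __name__ == "__main__":
    for day, temp in forecast(PAGE):
        print(day, temp)
```

Regular expressions work well for small, targeted extractions like this. However, they break easily when a site changes its markup, which is one reason dedicated HTML parsers exist.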

Use Tasker for Android To Search The Web

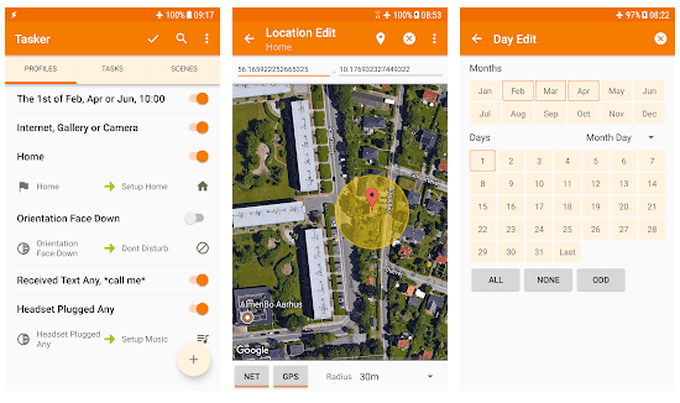

If you are an Android user, there are few simple options for scraping a website. You can use the IFTTT app with the steps outlined above, but Tasker might be a better fit. Tasker, available for $3.50 on the Play Store, is viewed by many as IFTTT’s older sibling, and it has a vast array of automation options. These include custom web searches, alerts when data on selected websites changes, and the ability to download content from Twitter. While not a traditional web scraping method, automation apps can provide much of the same functionality as professional web scraping tools without requiring you to learn to code or pay for an online data-gathering service.
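A change alert for a website boils down to three steps: fetch the page on a schedule, reduce it to a fingerprint, and compare that fingerprint with the one from the previous check. The sketch below shows only the comparison step, using a SHA-256 hash from Python’s standard library. It doesn’t describe how Tasker implements its own checks. The `page_changed` helper and the sample snapshots are hypothetical.

```python
import hashlib


def fingerprint(content: str) -> str:
    """Hash the page text so only a short digest needs to be stored between checks."""
    return hashlib.sha256(content.encode("utf-8")).hexdigest()


def page_changed(previous_digest, content):
    """Return (changed, new_digest). The first check (no previous digest) is not a change."""
    digest = fingerprint(content)
    changed = previous_digest is not None and digest != previous_digest
    return changed, digest


if __name__ == "__main__":
    digest = None
    # Hypothetical snapshots of the same page taken on three scheduled checks.
    for snapshot in ["Price: $20", "Price: $20", "Price: $15"]:
        changed, digest = page_changed(digest, snapshot)
        print("changed!" if changed else "no change")
```

Real pages often include timestamps or ads that differ on every load. In practice, you would hash only the part of the page you care about, such as a price.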

Automated Web Scraping

Whether you want to gather information for your business or make your life more convenient, web scraping is a skill worth learning. The information you gather, once properly sorted, will give you much greater insight into the things that interest you, your friends, and your business clients.